Speed Running CUDA in 1 Month

I spent the last month speed running CUDA by working through CUDA by Example by Jason Sanders and Edward Kandrot.

It turned out to be a really fun book. I didn’t have access to their header files, so I rebuilt most of the visuals from scratch using SDL2 instead of relying on their prebuilt graphics engine. That made things slower at times, but I learned way more by doing it myself.

Here are some things I picked up along the way:

1. GPU hardware matters, but CUDA helps

Every GPU has its own compute capability, core count, and memory limits, but CUDA does a pretty good job of abstracting those details, so the same kernel code runs across devices and you can focus on programming.
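To see that abstraction in action, here is a minimal sketch of a grid-stride loop, assuming a CUDA toolkit and any CUDA-capable GPU. Each thread walks the array in steps of the total thread count, so the kernel gives the same answer whether it is launched with one block or hundreds, on a small laptop GPU or a large data-center one. The names `scale` and `scale_on_gpu` are hypothetical, unified memory (`cudaMallocManaged`) is used only to keep the host code short, and error checking is omitted.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Grid-stride loop: each thread handles indices i, i + stride, i + 2*stride, ...
// so correctness does not depend on the launch configuration or GPU size.
__global__ void scale(float *data, float factor, int n) {
    int stride = blockDim.x * gridDim.x;
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n; i += stride) {
        data[i] *= factor;
    }
}

// Hypothetical helper: scales a copy of `in` on the GPU and returns it.
std::vector<float> scale_on_gpu(const std::vector<float> &in, float factor,
                                int blocks, int threads) {
    int n = static_cast<int>(in.size());
    float *data = nullptr;
    cudaMallocManaged(&data, n * sizeof(float));  // visible to host and device
    for (int i = 0; i < n; ++i) data[i] = in[i];
    scale<<<blocks, threads>>>(data, factor, n);
    cudaDeviceSynchronize();  // wait before the host reads managed memory
    std::vector<float> out(data, data + n);
    cudaFree(data);
    return out;
}
```

Calling `scale_on_gpu(v, 2.0f, 1, 32)` and `scale_on_gpu(v, 2.0f, 64, 256)` on the same input should produce identical vectors; only the running time differs.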

2. The general GPU architecture

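Think of it as a set of streaming multiprocessors (SMs), each packing many simple cores plus its own L1 cache and a small block of fast on-chip shared memory. All the SMs share an L2 cache and the GPU's large but slow global memory (DRAM). How you use the fast on-chip memory versus global memory makes or breaks performance.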

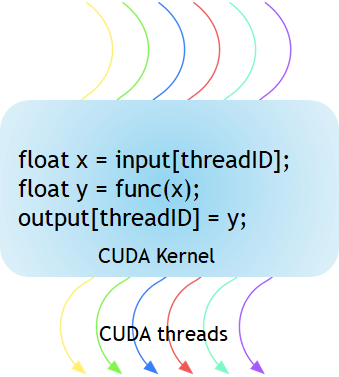
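A quick way to see these numbers for your own card is to query the runtime. This is a minimal sketch, assuming the CUDA runtime API; `print_device_info` is a hypothetical helper name. It reports each device's compute capability, its number of streaming multiprocessors (SMs, the groups of cores that each own an L1 cache and shared memory), and the sizes of shared memory, L2 cache, global memory, and constant memory.

```cuda
#include <cuda_runtime.h>
#include <cstdio>

// Prints the memory hierarchy of every GPU in the system and returns
// the number of devices found (0 if the runtime reports an error).
int print_device_info() {
    int count = 0;
    if (cudaGetDeviceCount(&count) != cudaSuccess) return 0;
    for (int dev = 0; dev < count; ++dev) {
        cudaDeviceProp prop;
        cudaGetDeviceProperties(&prop, dev);
        printf("Device %d: %s (compute capability %d.%d)\n",
               dev, prop.name, prop.major, prop.minor);
        printf("  Streaming multiprocessors: %d\n", prop.multiProcessorCount);
        printf("  Warp size:                 %d threads\n", prop.warpSize);
        printf("  Shared memory per block:   %zu bytes\n", prop.sharedMemPerBlock);
        printf("  L2 cache:                  %d bytes\n", prop.l2CacheSize);
        printf("  Global memory:             %zu bytes\n", prop.totalGlobalMem);
        printf("  Constant memory:           %zu bytes\n", prop.totalConstMem);
    }
    return count;
}
```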

3. The CUDA programming model

It’s simple at a high level: move data to the GPU, launch a kernel function, let the GPU crunch, and move results back. The hard part is doing this efficiently.
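Those steps map directly onto runtime API calls. Here is a minimal vector-addition sketch, assuming both inputs have the same non-zero length; `add` and `add_on_gpu` are hypothetical names, and production code would check the `cudaError_t` returned by every call.

```cuda
#include <cuda_runtime.h>
#include <vector>

// One thread per element: c[i] = a[i] + b[i].
__global__ void add(const float *a, const float *b, float *c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];  // guard: the last block may overshoot n
}

// Hypothetical helper: copy in, launch, copy out, free.
std::vector<float> add_on_gpu(const std::vector<float> &a,
                              const std::vector<float> &b) {
    int n = static_cast<int>(a.size());
    size_t bytes = n * sizeof(float);
    float *d_a, *d_b, *d_c;

    // 1. Allocate device memory and move the inputs to the GPU.
    cudaMalloc(&d_a, bytes);
    cudaMalloc(&d_b, bytes);
    cudaMalloc(&d_c, bytes);
    cudaMemcpy(d_a, a.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_b, b.data(), bytes, cudaMemcpyHostToDevice);

    // 2. Launch enough 256-thread blocks to cover all n elements.
    int threads = 256;
    int blocks = (n + threads - 1) / threads;
    add<<<blocks, threads>>>(d_a, d_b, d_c, n);

    // 3. Copy the result back (this cudaMemcpy waits for the kernel to finish).
    std::vector<float> c(n);
    cudaMemcpy(c.data(), d_c, bytes, cudaMemcpyDeviceToHost);

    // 4. Release device memory.
    cudaFree(d_a);
    cudaFree(d_b);
    cudaFree(d_c);
    return c;
}
```

The `if (i < n)` guard matters because the block count is rounded up, so the last block usually contains threads with no element to process.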

4. Threading and shared memory

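Threads are cheap on GPUs, but memory access isn’t. Shared memory is a small, fast on-chip buffer that the threads in a block can use to cooperate, so staging data there instead of repeatedly reading global memory can make kernels dramatically faster.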
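Here is a minimal sketch of the classic shared-memory dot product: each block accumulates products into a `__shared__` array, synchronizes with `__syncthreads()`, then reduces the array in place so only one value per block goes back to global memory. The names `dot`, `dot_on_gpu`, and `kThreads`, the 32-block launch, and finishing the sum on the CPU are assumptions for illustration; error checking is omitted.

```cuda
#include <cuda_runtime.h>
#include <vector>

constexpr int kThreads = 256;  // block size; must be a power of two for the reduction

// Each block computes a partial dot product in shared memory.
// Must be launched with blockDim.x == kThreads.
__global__ void dot(const float *a, const float *b, float *partial, int n) {
    __shared__ float cache[kThreads];  // on-chip, shared by the block's threads
    float sum = 0.0f;
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n;
         i += blockDim.x * gridDim.x) {
        sum += a[i] * b[i];
    }
    cache[threadIdx.x] = sum;
    __syncthreads();  // every value must be in cache before reducing

    // Tree reduction: halve the number of active threads each step.
    for (int s = blockDim.x / 2; s > 0; s /= 2) {
        if (threadIdx.x < s) cache[threadIdx.x] += cache[threadIdx.x + s];
        __syncthreads();
    }
    if (threadIdx.x == 0) partial[blockIdx.x] = cache[0];
}

// Hypothetical helper: the GPU reduces per block, the CPU adds the partials.
float dot_on_gpu(const std::vector<float> &a, const std::vector<float> &b) {
    int n = static_cast<int>(a.size());
    int blocks = 32;
    size_t bytes = n * sizeof(float);
    float *d_a, *d_b, *d_partial;
    cudaMalloc(&d_a, bytes);
    cudaMalloc(&d_b, bytes);
    cudaMalloc(&d_partial, blocks * sizeof(float));
    cudaMemcpy(d_a, a.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_b, b.data(), bytes, cudaMemcpyHostToDevice);

    dot<<<blocks, kThreads>>>(d_a, d_b, d_partial, n);

    std::vector<float> partial(blocks);
    cudaMemcpy(partial.data(), d_partial, blocks * sizeof(float),
               cudaMemcpyDeviceToHost);
    float total = 0.0f;
    for (float p : partial) total += p;

    cudaFree(d_a);
    cudaFree(d_b);
    cudaFree(d_partial);
    return total;
}
```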

5. Constant memory

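Great for small read-only values that every thread reads. Constant memory is limited to 64 KB, but it is cached, and when all threads in a warp read the same address the value is broadcast to all of them at once. That saves you from hitting slower global memory repeatedly.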
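As a sketch of the idea, assuming a polynomial with 1 to 16 coefficients: the coefficients go into a `__constant__` array via `cudaMemcpyToSymbol`, and every thread evaluates the polynomial at its own `x` with Horner's rule. Because all threads in a warp read the same coefficient on each loop iteration, those reads are served as broadcasts from the constant cache. The names `d_coeffs`, `poly`, and `poly_on_gpu` are hypothetical, and error checking is omitted.

```cuda
#include <cuda_runtime.h>
#include <vector>

constexpr int kMaxCoeffs = 16;
// Lives in the 64 KB constant space; broadcast across a warp when all
// threads read the same element, as they do in the loop below.
__constant__ float d_coeffs[kMaxCoeffs];

// Evaluates c0 + c1*x + c2*x^2 + ... for every x using Horner's rule.
__global__ void poly(const float *x, float *y, int n, int degree) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i >= n) return;
    float acc = d_coeffs[degree];
    for (int k = degree - 1; k >= 0; --k) acc = acc * x[i] + d_coeffs[k];
    y[i] = acc;
}

// Hypothetical helper: uploads the coefficients once, then evaluates on the GPU.
// Assumes 1 <= coeffs.size() <= kMaxCoeffs.
std::vector<float> poly_on_gpu(const std::vector<float> &coeffs,
                               const std::vector<float> &x) {
    int n = static_cast<int>(x.size());
    int degree = static_cast<int>(coeffs.size()) - 1;
    cudaMemcpyToSymbol(d_coeffs, coeffs.data(), coeffs.size() * sizeof(float));

    size_t bytes = n * sizeof(float);
    float *d_x, *d_y;
    cudaMalloc(&d_x, bytes);
    cudaMalloc(&d_y, bytes);
    cudaMemcpy(d_x, x.data(), bytes, cudaMemcpyHostToDevice);
    int threads = 256;
    poly<<<(n + threads - 1) / threads, threads>>>(d_x, d_y, n, degree);
    std::vector<float> y(n);
    cudaMemcpy(y.data(), d_y, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_x);
    cudaFree(d_y);
    return y;
}
```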

6. Texture memory

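Originally for graphics, but still really useful in general-purpose code when threads read data with spatial locality, such as neighboring cells in a grid, because the texture cache is optimized for exactly that access pattern.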
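Here is a minimal sketch of a 1D smoothing stencil that reads its input through a texture, assuming a GPU and toolkit that support texture objects (any recent one does). Older CUDA material typically binds data with texture references, an API that was removed in CUDA 12; this version uses the texture object API (`cudaCreateTextureObject` and `tex1Dfetch`) instead. `smooth` and `smooth_on_gpu` are hypothetical names, and error checking is omitted.

```cuda
#include <cuda_runtime.h>
#include <cstring>
#include <vector>

// 3-point average that reads its input through the texture cache.
// Neighboring threads read overlapping elements, which the cache exploits.
__global__ void smooth(cudaTextureObject_t tex, float *out, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i >= n) return;
    int left = i > 0 ? i - 1 : i;       // clamp at the edges
    int right = i < n - 1 ? i + 1 : i;
    out[i] = (tex1Dfetch<float>(tex, left) + tex1Dfetch<float>(tex, i) +
              tex1Dfetch<float>(tex, right)) / 3.0f;
}

// Hypothetical helper: wraps a linear device buffer in a texture object.
std::vector<float> smooth_on_gpu(const std::vector<float> &in) {
    int n = static_cast<int>(in.size());
    size_t bytes = n * sizeof(float);
    float *d_in, *d_out;
    cudaMalloc(&d_in, bytes);
    cudaMalloc(&d_out, bytes);
    cudaMemcpy(d_in, in.data(), bytes, cudaMemcpyHostToDevice);

    cudaResourceDesc res;
    std::memset(&res, 0, sizeof(res));
    res.resType = cudaResourceTypeLinear;
    res.res.linear.devPtr = d_in;
    res.res.linear.desc = cudaCreateChannelDesc<float>();
    res.res.linear.sizeInBytes = bytes;

    cudaTextureDesc desc;
    std::memset(&desc, 0, sizeof(desc));
    desc.readMode = cudaReadModeElementType;  // return raw floats

    cudaTextureObject_t tex = 0;
    cudaCreateTextureObject(&tex, &res, &desc, nullptr);

    int threads = 256;
    smooth<<<(n + threads - 1) / threads, threads>>>(tex, d_out, n);
    std::vector<float> out(n);
    cudaMemcpy(out.data(), d_out, bytes, cudaMemcpyDeviceToHost);

    cudaDestroyTextureObject(tex);
    cudaFree(d_in);
    cudaFree(d_out);
    return out;
}
```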

7. Atomics and streams

Atomics let many threads safely update the same memory location without race conditions, and streams let you queue independent work so data transfers overlap with computation. Together, they’re essential for getting the most out of CUDA.
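The two ideas combine nicely in a byte histogram. In this minimal sketch, each thread uses `atomicAdd` to bump the bin for its byte, and the input is split across two streams so one chunk's host-to-device copy can overlap with another chunk's kernel. Both kernels update the same 256 bins, which is safe only because the increments are atomic. It assumes page-locked host memory from `cudaHostAlloc`, which `cudaMemcpyAsync` needs for the copy to actually overlap with compute. `histogram`, `histogram_on_gpu`, the two-stream split, and the launch of 32 blocks of 256 threads are illustrative choices rather than tuned values, and error checking is omitted.

```cuda
#include <cuda_runtime.h>
#include <algorithm>
#include <vector>

// Each thread bumps the bin for its byte; atomicAdd makes concurrent
// increments of the same bin safe, even across kernels in different streams.
__global__ void histogram(const unsigned char *data, int n, unsigned int *bins) {
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n;
         i += blockDim.x * gridDim.x) {
        atomicAdd(&bins[data[i]], 1u);
    }
}

// Hypothetical helper: splits the input across two streams so one chunk's
// copy can overlap with another chunk's kernel.
std::vector<unsigned int> histogram_on_gpu(const std::vector<unsigned char> &in) {
    const int kStreams = 2;
    int n = static_cast<int>(in.size());
    int chunk = (n + kStreams - 1) / kStreams;

    // Async copies only overlap with compute when host memory is page-locked.
    unsigned char *h_pinned, *d_data;
    unsigned int *d_bins;
    cudaHostAlloc(&h_pinned, n, cudaHostAllocDefault);
    std::copy(in.begin(), in.end(), h_pinned);
    cudaMalloc(&d_data, n);
    cudaMalloc(&d_bins, 256 * sizeof(unsigned int));
    cudaMemset(d_bins, 0, 256 * sizeof(unsigned int));

    cudaStream_t streams[kStreams];
    for (int s = 0; s < kStreams; ++s) cudaStreamCreate(&streams[s]);
    for (int s = 0; s < kStreams; ++s) {
        int offset = s * chunk;
        int count = std::min(chunk, n - offset);
        if (count <= 0) break;
        cudaMemcpyAsync(d_data + offset, h_pinned + offset, count,
                        cudaMemcpyHostToDevice, streams[s]);
        histogram<<<32, 256, 0, streams[s]>>>(d_data + offset, count, d_bins);
    }
    cudaDeviceSynchronize();  // wait for every stream to finish

    std::vector<unsigned int> bins(256);
    cudaMemcpy(bins.data(), d_bins, 256 * sizeof(unsigned int),
               cudaMemcpyDeviceToHost);
    for (int s = 0; s < kStreams; ++s) cudaStreamDestroy(streams[s]);
    cudaFreeHost(h_pinned);
    cudaFree(d_data);
    cudaFree(d_bins);
    return bins;
}
```

With only two chunks the overlap is small; real pipelines cut the input into many chunks and round-robin them across streams so copies and kernels keep each other busy.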

Learning CUDA in a month was intense, but totally worth it. I feel like I only scratched the surface, but now I have a solid foundation to build from.

Also, here's a GIF of a Julia set visualizer I built with SDL2 and sped up with CUDA:

And here's the full repo with all the work: